TensorRT Accelerated Deployment

1. Environment Setup

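1.1 Installing CUDA

- Download page

https://developer.nvidia.com/cuda-toolkit-archive. Select the runfile (local) installer type; it bundles all the packages and is the most likely to install cleanly.

- Then run the installer

sudo sh cuda_xxxx_linux.run

- Note: deselect the Driver component during installation so the existing GPU driver is not reinstalled, and install only the CUDA toolkit. This works because NVIDIA drivers are backward compatible with older CUDA versions, provided the installed driver is new enough for the CUDA version you choose.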
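Before skipping the driver, it can help to confirm that the existing driver is new enough. The header printed by `nvidia-smi` contains a `CUDA Version: X.Y` field, which is the newest CUDA version that driver supports. The sketch below uses hypothetical helper names (`max_cuda_from_smi`, `version_ge`) to extract that field and compare it with the toolkit version you plan to install; it assumes GNU `sort -V` is available.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking driver/toolkit compatibility.

# Succeeds (exit 0) if dotted version $1 >= dotted version $2, using GNU sort -V.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Reads nvidia-smi output on stdin and prints the value of its "CUDA Version:" field.
max_cuda_from_smi() {
    sed -n 's/.*CUDA Version: *\([0-9][0-9.]*\).*/\1/p' | head -n 1
}

# Usage (needs an installed NVIDIA driver):
#   max=$(nvidia-smi | max_cuda_from_smi)
#   if version_ge "$max" 11.0; then echo "driver supports CUDA 11.0"; else echo "driver too old"; fi
```

Comparing with `sort -V` rather than as plain strings keeps versions such as 11.10 and 11.9 in the right order.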

1.1.1 Configuring environment variables

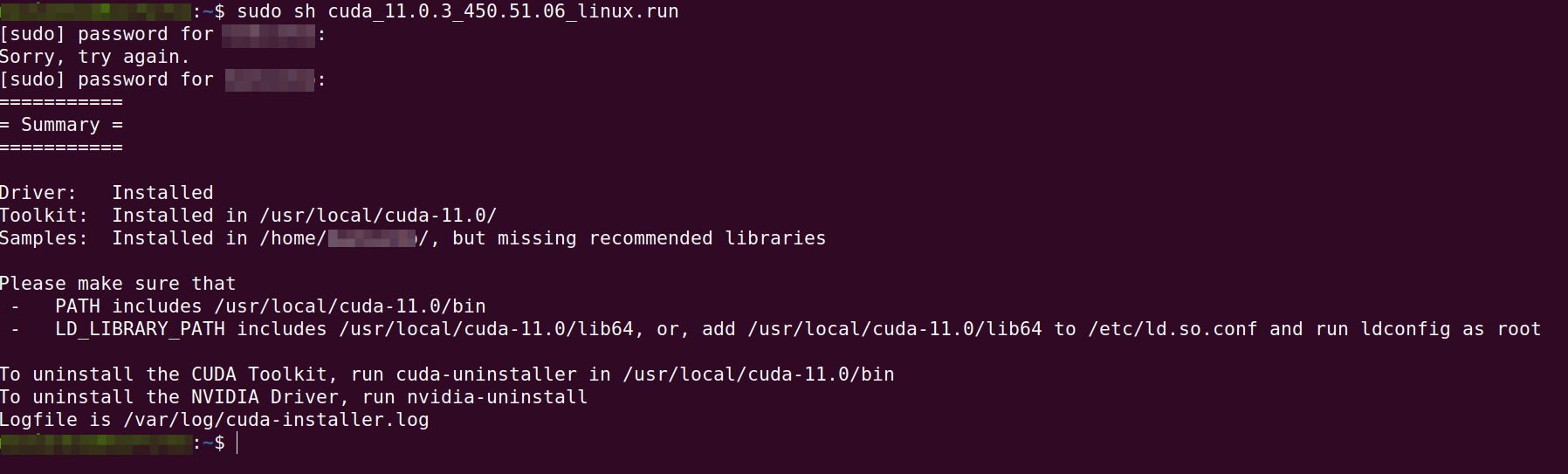

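- Configure the environment variables according to the paths shown in the installation-success screenshot: open ~/.bashrc and append the lines below (the paths assume CUDA 11.0; use your installed version).

vim ~/.bashrc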

# CUDA Soft Link

export PATH=/usr/local/cuda-11.0/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-11.0/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

# Reload the configuration

source ~/.bashrc

- Then verify that CUDA is installed correctly by running:

nvcc -V
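The environment setup and the check above can also be scripted. In the sketch below, the helper names `path_prepend` and `nvcc_release` are hypothetical. `path_prepend` puts a directory at the front of a colon-separated list only if it is not already an entry, so re-sourcing ~/.bashrc does not keep growing PATH; like the `${PATH:+:${PATH}}` form above, it avoids a stray colon when the variable is empty (an empty PATH entry means the current directory). `nvcc_release` extracts the number after `release` in the `nvcc -V` output so you can compare it with the CUDA version you configured. The CUDA path is assumed to be /usr/local/cuda-11.0, as in this guide.

```bash
#!/usr/bin/env bash
# Hypothetical helpers; the CUDA path in the usage follows this guide (CUDA 11.0).

# Prints the list $1 with directory $2 in front, unless $2 is already an entry.
path_prepend() {
    case ":$1:" in
        *":$2:"*) printf '%s\n' "$1" ;;            # already present: unchanged
        *)        printf '%s\n' "$2${1:+:$1}" ;;   # append ":old" only if old is non-empty
    esac
}

# Reads `nvcc -V` output on stdin and prints the number after "release".
nvcc_release() {
    sed -n 's/.*release \([0-9][0-9.]*\),.*/\1/p'
}

# Usage, e.g. in ~/.bashrc instead of the two export lines:
#   CUDA_HOME=/usr/local/cuda-11.0
#   export PATH="$(path_prepend "$PATH" "$CUDA_HOME/bin")"
#   export LD_LIBRARY_PATH="$(path_prepend "$LD_LIBRARY_PATH" "$CUDA_HOME/lib64")"
# Then check which toolkit nvcc belongs to:
#   nvcc -V | nvcc_release
```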

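1.2 Installing cuDNN

- Download page: https://developer.nvidia.com/rdp/cudnn-download?spm=a2c4e.10696291.0.0.1df819a4HJWSTe

- Pick the cuDNN release that matches your CUDA version and download the first entry, the Linux x86_64 archive.

- Extract the downloaded cudnn-11.0-linux-x64-v8.0.5.39.tgz, which produces a folder named cuda:

tar -zxvf cudnn-11.0-linux-x64-v8.0.5.39.tgz

- Then copy the files in the cuda folder into the lib64 and include directories of your CUDA installation. This archive is built for CUDA 11.0, so the target is /usr/local/cuda-11.0, the same directory configured in ~/.bashrc; use the directory of your own CUDA version. The last command makes the copied files readable by all users.

# If there is no lib64 folder, copy the files from lib instead; -P keeps the library symlinks as links

sudo cp -P cuda/lib64/* /usr/local/cuda-11.0/lib64/

sudo cp cuda/include/* /usr/local/cuda-11.0/include/

sudo chmod a+r /usr/local/cuda-11.0/include/cudnn*.h /usr/local/cuda-11.0/lib64/libcudnn*

- Once the copy is done, check the cuDNN version with:

cat /usr/local/cuda-11.0/include/cudnn_version.h | grep CUDNN_MAJOR -A 2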
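To catch a mismatch between the cuDNN archive and the CUDA installation, the sketch below (hypothetical helpers `cudnn_header_version` and `cudnn_archive_cuda`) reads the `CUDNN_MAJOR`, `CUDNN_MINOR` and `CUDNN_PATCHLEVEL` defines that the grep above prints, and extracts the CUDA version from an archive name of the form used here. The `cudnn-<cuda>-linux-...` naming pattern is an assumption based on this one file name.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for checking the cuDNN installation.

# Prints MAJOR.MINOR.PATCHLEVEL from a cudnn_version.h file.
cudnn_header_version() {
    awk '$1 == "#define" && $2 == "CUDNN_MAJOR"      { major = $3 }
         $1 == "#define" && $2 == "CUDNN_MINOR"      { minor = $3 }
         $1 == "#define" && $2 == "CUDNN_PATCHLEVEL" { patch = $3 }
         END { if (major != "") print major "." minor "." patch }' "$1"
}

# Prints the CUDA version from an archive name like cudnn-11.0-linux-x64-v8.0.5.39.tgz.
cudnn_archive_cuda() {
    basename "$1" | sed -n 's/^cudnn-\([0-9][0-9.]*\)-linux.*/\1/p'
}

# Usage:
#   cudnn_header_version /usr/local/cuda-11.0/include/cudnn_version.h
#   cudnn_archive_cuda cudnn-11.0-linux-x64-v8.0.5.39.tgz   # should match `nvcc -V`
```

Matching on the exact define names (rather than grepping for `CUDNN_MAJOR`) skips the `CUDNN_VERSION` line, which also mentions those macros.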

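#### 1.3 Installing TensorRT

- The TensorRT version only needs to match your CUDA version. Download page: https://link.zhihu.com/?target=https%3A//developer.nvidia.com/nvidia-tensorrt-8x-download

- Install from the tar package of a GA (General Availability, i.e. stable) release.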

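```bash
# Extract the TensorRT archive
# tar -xzvf <archive file name>

# This yields a folder such as TensorRT-5.0.2.6 (the version in these commands is only an
# example; use the folder name of the package you downloaded). Add the absolute path of its
# lib folder to the library search path; this export only lasts for the current shell session.
export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/home/lthpc/tensorrt_tar/TensorRT-5.0.2.6/lib

# Run from inside the TensorRT-5.0.2.6 folder: copy the libraries and headers to system paths
sudo cp -r ./lib/* /usr/lib
sudo cp -r ./include/* /usr/include
```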

- Install the Python packages

# Install TensorRT

cd TensorRT-5.0.2.6/python

pip install tensorrt-5.0.2.6-py2.py3-none-any.whl

# Install UFF, needed to convert TensorFlow models

cd ../uff

pip install uff-0.5.5-py2.py3-none-any.whl

# Install graphsurgeon, needed for custom network structures

cd ../graphsurgeon

pip install graphsurgeon-0.3.2-py2.py3-none-any.whl

- Test whether the installation succeeded

dpkg -l | grep TensorRT

Note that dpkg only lists TensorRT when it was installed from the .deb packages; a tar installation like the one above will not appear. For a .deb installation of TensorRT 7.1 with CUDA 10.2, the output looks like this:

ii  graphsurgeon-tf  7.1.0-1+cuda10.2  amd64  GraphSurgeon for TensorRT package
ii  libnvinfer-bin  7.1.0-1+cuda10.2  amd64  TensorRT binaries
ii  libnvinfer-dev  7.1.0-1+cuda10.2  amd64  TensorRT development libraries and headers
ii  libnvinfer-doc  7.1.0-1+cuda10.2  all  TensorRT documentation
ii  libnvinfer-plugin-dev  7.1.0-1+cuda10.2  amd64  TensorRT plugin libraries
ii  libnvinfer-plugin7  7.1.0-1+cuda10.2  amd64  TensorRT plugin libraries
ii  libnvinfer-samples  7.1.0-1+cuda10.2  all  TensorRT samples
ii  libnvinfer7  7.1.0-1+cuda10.2  amd64  TensorRT runtime libraries
ii  libnvonnxparsers-dev  7.1.0-1+cuda10.2  amd64  TensorRT ONNX libraries
ii  libnvonnxparsers7  7.1.0-1+cuda10.2  amd64  TensorRT ONNX libraries
ii  libnvparsers-dev  7.1.0-1+cuda10.2  amd64  TensorRT parsers libraries
ii  libnvparsers7  7.1.0-1+cuda10.2  amd64  TensorRT parsers libraries
ii  python-libnvinfer  7.1.0-1+cuda10.2  amd64  Python bindings for TensorRT
ii  python-libnvinfer-dev  7.1.0-1+cuda10.2  amd64  Python development package for TensorRT
ii  python3-libnvinfer  7.1.0-1+cuda10.2  amd64  Python 3 bindings for TensorRT
ii  python3-libnvinfer-dev  7.1.0-1+cuda10.2  amd64  Python 3 development package for TensorRT
ii  tensorrt  7.1.0.x-1+cuda10.2  amd64  Meta package of TensorRT
ii  uff-converter-tf  7.1.0-1+cuda10.2  amd64  UFF converter for TensorRT package

# Test from Python (works for both tar and .deb installations)

python -c "import tensorrt; import uff; print(tensorrt.__version__)"
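Two gaps in the tar installation can be scripted. The `export LD_LIBRARY_PATH=...` line only affects the current shell, so it is usually appended to ~/.bashrc; and because dpkg does not know about tar installs, looking for the libnvinfer library directly is a more reliable check. The sketch below uses hypothetical helpers: `append_once` appends a line to a file unless an identical line already exists, and `find_lib` prints the first `lib<NAME>.so*` file found in the given directories. The TensorRT path is the example path from this guide.

```bash
#!/usr/bin/env bash
# Hypothetical helpers for a tar-based TensorRT installation.

# Appends line $2 to file $1 unless the file already contains exactly that line.
append_once() {
    grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}

# Prints the first file matching lib<NAME>.so* in the given directories; fails if none.
find_lib() {
    name=$1
    shift
    for dir in "$@"; do
        for f in "$dir"/lib"$name".so*; do
            [ -e "$f" ] && { printf '%s\n' "$f"; return 0; }
        done
    done
    return 1
}

# Usage (example path from this guide; adjust to your TensorRT folder):
#   TRT_DIR=/home/lthpc/tensorrt_tar/TensorRT-5.0.2.6
#   append_once ~/.bashrc "export LD_LIBRARY_PATH=\$LD_LIBRARY_PATH:$TRT_DIR/lib"
#   find_lib nvinfer /usr/lib "$TRT_DIR/lib" || echo "libnvinfer not found"
```

The escaped `\$LD_LIBRARY_PATH` keeps the variable unexpanded in ~/.bashrc, so it is resolved each time a new shell starts.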

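1.4 Installing OpenCV

- Download page

https://opencv.org/releases/. Download the version that matches your CUDA setup; this guide uses 4.4.0.

- Build and install from source

unzip opencv-4.4.0.zip

cd opencv-4.4.0

sudo apt-get install cmake

# If the build reports errors, install the missing dependencies
# (libjasper-dev is not in the default repositories of recent Ubuntu releases; drop it if apt cannot find it)

sudo apt-get install build-essential libgtk2.0-dev libavcodec-dev libavformat-dev libjpeg-dev libtiff5-dev libswscale-dev libjasper-dev

mkdir build

cd build

# OPENCV_GENERATE_PKGCONFIG=ON makes OpenCV 4 generate the opencv4.pc file used by the pkg-config setting below

cmake -D OPENCV_GENERATE_PKGCONFIG=ON ..

# Compile with 9 parallel jobs to speed up the build (plain make also works, just more slowly)

make -j9

sudo make install

# Add the library path to the linker configuration (create the file if it does not exist)

sudo vim /etc/ld.so.conf.d/opencv.conf

# and put this line in it:

/usr/local/lib

# Reload the linker cache

sudo ldconfig

# Configure the pkg-config path

sudo vim /etc/bash.bashrc

# Append the following lines:

PKG_CONFIG_PATH=$PKG_CONFIG_PATH:/usr/local/lib/pkgconfig

export PKG_CONFIG_PATH

# Reload

source /etc/bash.bashrc

source ~/.bashrc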
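To confirm which OpenCV version ended up installed, the sketch below (hypothetical helper `opencv_header_version`) reads the `CV_VERSION_MAJOR`, `CV_VERSION_MINOR` and `CV_VERSION_REVISION` defines from `opencv2/core/version.hpp`. With the default /usr/local prefix, OpenCV 4 places its headers under /usr/local/include/opencv4; `pkg-config --modversion opencv4` gives the same answer once the opencv4.pc file exists.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints MAJOR.MINOR.REVISION from OpenCV's version.hpp.
opencv_header_version() {
    awk '$1 == "#define" && $2 == "CV_VERSION_MAJOR"    { major = $3 }
         $1 == "#define" && $2 == "CV_VERSION_MINOR"    { minor = $3 }
         $1 == "#define" && $2 == "CV_VERSION_REVISION" { rev = $3 }
         END { if (major != "") print major "." minor "." rev }' "$1"
}

# Usage (default install prefix /usr/local assumed):
#   opencv_header_version /usr/local/include/opencv4/opencv2/core/version.hpp
#   pkg-config --modversion opencv4
```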

2. Model Conversion

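2.1 Converting the .pt model to a .wts file

- Use the tensorrtx source code to convert yolov5s.pt into a .wts model.

- Copy gen_wts.py from the tensorrtx source into the yolov5 source tree and run it there to generate the .wts model.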
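Before building, a quick sanity check of the generated file can save a failed engine build. The sketch below assumes the plain-text layout written by tensorrtx's gen_wts.py: the first line holds the number of weight tensors, and each following line is a tensor name, its value count, and that many hex-encoded float32 values. The helper name `check_wts` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: checks that a .wts file's tensor count and per-line value counts agree.
# Assumed layout: line 1 = number of tensors; then "name count hex1 hex2 ..." per tensor.
check_wts() {
    awk 'NR == 1 { expected = $1; next }
         {
             tensors++
             if ((NF - 2) != $2) { print "line " NR ": expected " $2 " values, found " (NF - 2); bad = 1 }
         }
         END {
             if (tensors != expected) { print "header says " expected " tensors, found " tensors; bad = 1 }
             if (!bad) print "ok: " tensors " tensors"
             exit bad
         }' "$1"
}

# Usage:
#   check_wts yolov5s.wts
```

The check only counts fields, so it runs quickly even on large models, but it cannot detect values that are well-formed yet wrong.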

2.2 Building the engine and running inference

cd tensorrtx/yolov5

mkdir build

cd build

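# Copy the yolov5s.wts generated in the yolov5 source directory into build (adjust the path)

cp /path/to/yolov5/yolov5s.wts .

cmake ..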

make

# ./yolov5_det -s [.wts] [.engine] [n/s/m/l/x/n6/s6/m6/l6/x6 or c/c6 gd gw] // serialize model to plan file

# ./yolov5_det -d [.engine] [image folder] // deserialize and run inference, the images in [image folder] will be processed.

./yolov5_det -s yolov5s.wts yolov5s.engine s

./yolov5_det -d yolov5s.engine ../images
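The two steps above can be wrapped in a small helper (hypothetical name `yolov5_engine`) that rejects model sizes not in the usage list, serializes the engine, and optionally runs inference on an image folder. Because a TensorRT engine is built for a specific GPU and TensorRT version, this step is normally repeated on each deployment machine. The optional `YOLOV5_DET` variable is an assumption of this sketch, not part of tensorrtx, and custom c/c6 models with gd/gw are not handled.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the yolov5_det -s / -d modes shown above.
# YOLOV5_DET (assumption of this sketch) overrides the binary path; default ./yolov5_det.
yolov5_engine() {  # usage: yolov5_engine SIZE [IMAGE_DIR]
    size=$1
    images=$2
    det=${YOLOV5_DET:-./yolov5_det}
    case $size in
        n|s|m|l|x|n6|s6|m6|l6|x6) ;;
        *) echo "unsupported model size: $size" >&2; return 1 ;;
    esac
    # Serialize yolov5<size>.wts into yolov5<size>.engine
    "$det" -s "yolov5$size.wts" "yolov5$size.engine" "$size" || return 1
    # Optionally deserialize the engine and run inference on every image in IMAGE_DIR
    if [ -n "$images" ]; then
        "$det" -d "yolov5$size.engine" "$images"
    fi
}

# Usage, from tensorrtx/yolov5/build:
#   yolov5_engine s ../images
```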
